Fitting A Linear Curve (Line)

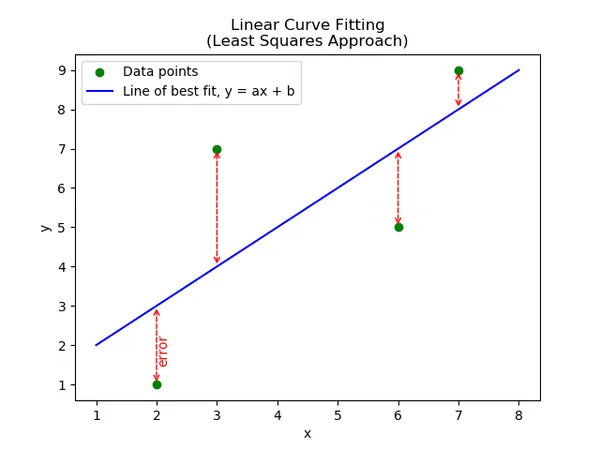

Fitting a linear curve (a line!) to a set of data is called linear regression. Typically, we want to minimize the sum of the squares of the vertical errors between each point and the line; this is the method of least squares. The graph above shows four data points in green and the calculated line of best fit in blue.

We can write an equation for the error as follows:

$$
\begin{align}
err &= \sum d_i^2 \\
&= (y_1 - f(x_1))^2 + (y_2 - f(x_2))^2 + \cdots + (y_n - f(x_n))^2 \\
&= \sum_{i=1}^{n} (y_i - f(x_i))^2
\end{align}
$$

where:

- $n$ is the number of data points
- $x_i, y_i$ are the coordinates of the $i$-th data point
- $f(x)$ is the function we are fitting to the data
- $d_i = y_i - f(x_i)$ is the vertical distance from the $i$-th point to the curve
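As a minimal sketch of the error equation above, the Python below sums the squared vertical distances for any candidate function $f$. The helper name `sum_squared_error`, the sample points (borrowed from the worked example further down) and the candidate line $f(x) = x + 1$ are illustrative assumptions, not part of any library.

```python
from typing import Callable, Iterable, Tuple


def sum_squared_error(
    points: Iterable[Tuple[float, float]], f: Callable[[float], float]
) -> float:
    """Hypothetical helper: err = sum over i of (y_i - f(x_i))^2."""
    # d_i = y_i - f(x_i) is the vertical distance from each point to the curve
    return sum((y - f(x)) ** 2 for x, y in points)


# Points from the worked example; f(x) = x + 1 is just one candidate line
pts = [(2, 1), (3, 7), (6, 5), (7, 9)]
print(sum_squared_error(pts, lambda x: x + 1))  # 18
```

Any other candidate function can be passed in the same way, which makes it easy to compare how well different curves fit the same data.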

Since we want to fit a straight line, we can write $f(x)$ as:

$$f(x) = ax + b$$

Substituting this into the error equation above:

$$err = \sum_{i=1}^{n} (y_i - (ax_i + b))^2$$

How do we find the minimum of this error function? We use the derivative. At the minimum, the derivative of $err$ with respect to each unknown is zero, and because $err$ is a sum of squares (a bowl-shaped, convex function of $a$ and $b$), the point where those derivatives vanish is the minimum.
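To see that $err$ really has a single lowest point, one option is to evaluate it over a grid of $(a, b)$ values and keep the smallest. This brute-force grid search is swapped in purely as an illustration and is not the method used on this page. The helper names `line_err` and `grid_search`, the grid range of -3 to 3 in steps of 0.5, and the data points (from the worked example below) are all assumptions.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def line_err(points: Sequence[Point], a: float, b: float) -> float:
    """Hypothetical helper: err(a, b) = sum of (y_i - (a*x_i + b))^2."""
    return sum((y - (a * x + b)) ** 2 for x, y in points)


def grid_search(
    points: Sequence[Point], lo: float = -3.0, hi: float = 3.0, step: float = 0.5
) -> Tuple[float, float, float]:
    """Hypothetical helper: brute-force search for the (a, b) with the smallest err."""
    steps = int(round((hi - lo) / step))
    values: List[float] = [lo + k * step for k in range(steps + 1)]
    best: Optional[Tuple[float, float, float]] = None
    for a in values:
        for b in values:
            e = line_err(points, a, b)
            # Keep the first grid point with the lowest error seen so far
            if best is None or e < best[2]:
                best = (a, b, e)
    assert best is not None
    return best


# Points from the worked example
pts = [(2, 1), (3, 7), (6, 5), (7, 9)]
print(grid_search(pts))  # (1.0, 1.0, 18.0)
```

A grid search only finds the minimum to within the grid spacing and becomes expensive quickly as the number of unknowns grows, which is why setting the derivatives to zero is the better approach.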

Because we are solving for two unknowns, $a$ and $b$, we take the partial derivative of $err$ with respect to each one and set it to zero:

$$\frac{\partial err}{\partial a} = -2 \sum_{i=1}^{n} x_i(y_i - ax_i - b) = 0$$

$$\frac{\partial err}{\partial b} = -2 \sum_{i=1}^{n} (y_i - ax_i - b) = 0$$

We now have two equations in two unknowns, so we can solve for $a$ and $b$! Dividing each equation by $-2$, expanding the sums and using $\sum_{i=1}^{n} b = nb$, let's rewrite the equations in the form $C_1 a + C_2 b = C_3$:

$$
\begin{align}
(\sum x_i^2)\, a + (\sum x_i)\, b &= \sum x_i y_i \\
(\sum x_i)\, a + n\, b &= \sum y_i
\end{align}
$$

We will put this into matrix form so we can easily solve it:

$$
\begin{bmatrix}
\sum x_i^2 & \sum x_i \\
\sum x_i & n
\end{bmatrix}
\begin{bmatrix}
a \\ b
\end{bmatrix} =
\begin{bmatrix}
\sum x_i y_i \\
\sum y_i
\end{bmatrix}
$$

This has the form $\mathbf{A}\mathbf{x} = \mathbf{B}$, where $\mathbf{A}$ is the $2 \times 2$ matrix of sums, $\mathbf{x} = \begin{bmatrix} a & b \end{bmatrix}^T$ is the vector of unknowns and $\mathbf{B}$ is the right-hand side. We solve it by rearranging, which involves taking the inverse of $\mathbf{A}$:

$$\mathbf{x} = \mathbf{A}^{-1}\mathbf{B}$$

$\mathbf{A}$ is invertible as long as at least two of the $x_i$ values differ. Thus the linear curve of best fit is:

$$y = ax + b$$

where $a = \mathbf{x}[0]$ and $b = \mathbf{x}[1]$ are the two elements of the solution vector. See https://github.com/gbmhunter/BlogAssets/tree/master/Mathematics/CurveFitting/linear for Python code that performs these calculations.
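Below is a minimal pure-Python sketch of these calculations, written independently of the linked repository (so it should not be read as that code). It builds the sums that make up $\mathbf{A}$ and $\mathbf{B}$, then applies the explicit $2 \times 2$ inverse $\mathbf{A}^{-1} = \frac{1}{n\sum x_i^2 - (\sum x_i)^2}\begin{bmatrix} n & -\sum x_i \\ -\sum x_i & \sum x_i^2 \end{bmatrix}$. It also evaluates the two partial derivatives at the result as a check. The names `fit_line` and `err_gradient` are hypothetical.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def fit_line(points: Sequence[Point]) -> Tuple[float, float]:
    """Hypothetical helper: least-squares line y = a*x + b via the normal equations."""
    n = len(points)
    sum_x = sum(x for x, _ in points)
    sum_y = sum(y for _, y in points)
    sum_xx = sum(x * x for x, _ in points)
    sum_xy = sum(x * y for x, y in points)

    # A = [[sum_xx, sum_x], [sum_x, n]] and B = [sum_xy, sum_y]
    det = n * sum_xx - sum_x * sum_x
    if det == 0:
        # det = n * sum((x_i - mean)^2), so it is zero only if every x_i is equal
        raise ValueError("need at least two distinct x values")

    # x = A^-1 B, using A^-1 = (1 / det) * [[n, -sum_x], [-sum_x, sum_xx]]
    a = (n * sum_xy - sum_x * sum_y) / det
    b = (sum_xx * sum_y - sum_x * sum_xy) / det
    return a, b


def err_gradient(points: Sequence[Point], a: float, b: float) -> Tuple[float, float]:
    """Hypothetical helper: d(err)/da and d(err)/db from the partial derivatives above."""
    d_a = -2 * sum(x * (y - a * x - b) for x, y in points)
    d_b = -2 * sum(y - a * x - b for x, y in points)
    return d_a, d_b


# Points from the worked example
pts = [(2, 1), (3, 7), (6, 5), (7, 9)]
a, b = fit_line(pts)
print(a, b)  # 1.0 1.0
print(err_gradient(pts, a, b))
```

Both components of the gradient should come out as zero (up to floating-point rounding for non-integer data). If NumPy is available, `numpy.polyfit(xs, ys, 1)` fits the same least-squares line and returns the coefficients highest power first, i.e. `[a, b]`.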

Worked Example

Find the line of best fit for the following points:

$$(2, 1), \quad (3, 7), \quad (6, 5), \quad (7, 9)$$

First, we find the values of each of the four elements of the $\mathbf{A}$ matrix:

$$
\begin{align}
A_{11} &= \sum x_i^2 = 2^2 + 3^2 + 6^2 + 7^2 = 98 \\
A_{12} &= \sum x_i = 2 + 3 + 6 + 7 = 18 \\
A_{21} &= \sum x_i = 2 + 3 + 6 + 7 = 18 \\
A_{22} &= n = 4
\end{align}
$$

And now we find the elements of the $\mathbf{B}$ vector:

$$
\begin{align}
B_{11} &= \sum x_i y_i = 2 \cdot 1 + 3 \cdot 7 + 6 \cdot 5 + 7 \cdot 9 = 116 \\
B_{21} &= \sum y_i = 1 + 7 + 5 + 9 = 22
\end{align}
$$

Plugging these values into the matrix equation:

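$$
\begin{bmatrix}
98 & 18 \\
18 & 4
\end{bmatrix}
\begin{bmatrix}
a \\ b
\end{bmatrix} =
\begin{bmatrix}
116 \\
22
\end{bmatrix}
$$

We can then solve $\mathbf{x} = \mathbf{A}^{-1}\mathbf{B}$. For a $2 \times 2$ matrix, the inverse is found by swapping the two diagonal elements, negating the two off-diagonal elements and dividing by the determinant, here $\det \mathbf{A} = 98 \cdot 4 - 18 \cdot 18 = 68$:

$$
\begin{bmatrix} a \\ b \end{bmatrix} =
\frac{1}{68}
\begin{bmatrix} 4 & -18 \\ -18 & 98 \end{bmatrix}
\begin{bmatrix} 116 \\ 22 \end{bmatrix} =
\frac{1}{68}
\begin{bmatrix} 4 \cdot 116 - 18 \cdot 22 \\ -18 \cdot 116 + 98 \cdot 22 \end{bmatrix} =
\frac{1}{68}
\begin{bmatrix} 68 \\ 68 \end{bmatrix}
$$

which simplifies to: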

$$\begin{bmatrix} a \\ b \end{bmatrix} = \begin{bmatrix} 1 \\ 1 \end{bmatrix}$$

Thus our line of best fit is:

$$y = 1x + 1$$

The points and line of best fit are shown in the graph below: