pandas

pandas is a data analysis library for Python. It provides high-level data structures and analytical tools for data analysis.

Installation

pandas can be installed with pip:

$ pip install pandasor conda:

$ conda install pandasThe Dataframe

The core data structure in pandas is the DataFrame. A DataFrame is a container for holding tabular data (2D), and supports labelled rows and columns.

You can create a DataFrame by passing in a dict, where each key is a column name (of string type) and the value is a list containing the data for that column (one entry per row):

df = pd.DataFrame({ 'Name': [ 'John', 'Geoff', 'Brett' ], 'Age': [ 45, 23, 30 ], 'Height': [ 1.23, 4.56, 7.89 ],})You can then print the dataframe, and pandas will render the data nicely in a tabular form:

print(df)# Name Age Height# 0 John 45 1.23# 1 Geoff 23 4.56# 2 Brett 30 7.89Selecting Columns

You can then select (extract) certain columns of data by passing in a list of the column names you want:

print(df[['Name', 'Height']])# Name Height# 0 John 1.23# 1 Geoff 4.56# 2 Brett 7.8The command above returns a dataframe.

Selecting Rows Based On A Column Value

To select all rows in a dataframe in where a particular column has a certain value (filtering), use the following code:

df.loc[df['column_name'] == some_value]This returns a new dataframe with only the applicable rows included.

For example:

import pandas as pd

df = pd.DataFrame({ 'A': [ 1, 5, 6, 3, 4 ], 'B': [ 'foo', 'bar', 'bar', 'foo', 'foo' ]})print(df)# A B# 0 1 foo# 1 5 bar# 2 6 bar# 3 3 foo# 4 4 foo

filtered_df = df.loc[df['B'] == 'foo']print(filtered_df)# A B# 0 1 foo# 3 3 foo# 4 4 fooFor more advanced selection criteria, you can provide your own filter function, which takes one argument, the current row:

def filter(row): # This will filter out all rows where A is not an even number. if row['A'] % 2 == 0: return True else: return False

filt_df = df.loc[df.apply(filter, axis=1)]print(filt_df)# A B# 2 6 bar# 4 4 fooSorting

You can sort a dataframe by a specific column using the sort_values() function, providing a column name to the in parameter to specify what column to sort by:

df = pd.DataFrame({ 'A': [ 1, 5, 6, 3, 4 ], 'B': [ 'foo', 'bar', 'bar', 'foo', 'foo' ]})sorted_df = df.sort_values(by='A')print(sorted_df)# A B# 0 1 foo# 3 3 foo# 4 4 foo# 1 5 bar# 2 6 barBy default, pandas will sort in ascending order. To sort in descending order, provide the optional parameter ascending=False:

sorted_df = df.sort_values(by='A')print(sorted_df, ascending=False)# A B# 2 6 bar# 1 5 bar# 4 4 foo# 3 3 foo# 0 1 fooSaving and Loading CSV Files

pandas has first-tier support for CSV files. It can load in a CSV file directly into a DataFrame, ready for analysing, without having to write any line-by-line CSV parsing. It will also label the columns if the CSV file has a header row (which is recommended!).

To load a CSV file into a DataFrame:

df = pandas.read_csv('file_path.csv')Be warned that if you have whitespace between values in your CSV file, you may need to do some extra massaging to get the correct data. One easy way to this is to pass skipinitialspace=True into the read_csv() function:

df = pandas.read_csv('file_path.csv', skipinitialspace=True)This will work as long as the white space is always prior to value, and there is no whitespace between the end of the value and the separator (e.g. comma). This is normally the case if whitespace was used to visually align the columns in the CSV file.

Saving and Loading to Other File Formats

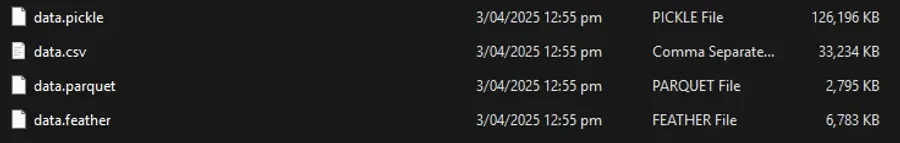

For large datasets, CSV files might become too large and slow to save and load. Pandas also supports a number of other file formats/serialization protocols, including pickle (Python’s built-in serialization protocol), Feather and Parquet.

To save and load to pickle, you can use the to_pickle() and read_pickle() functions:

df.to_pickle('file_path.pickle')df = pandas.read_pickle('file_path.pickle')Similar to pickle, you can use to_parquet() and read_parquet() to save and load to Parquet. Parquet is developed by Apache and is a open source, column-based data format designed for efficient data storage and retrieval.

df.to_parquet('file_path.parquet')df = pandas.read_parquet('file_path.parquet')Feather is also done in a similar fashion:

df.to_feather('file_path.feather')df = pandas.read_feather('file_path.feather')I did some basic tests with all of these formats and found the Parquet format to generate some of the smallest files and fastest save/load times. This is a placeholder for the reference: fig-dataframe-file-format-size-comparison shows a comparison of the file size of the dataframe file formats with a test dataset. This dataset contained approx. 170,000 rows and 100 columns. Many of the columns did not contain data for many of the rows, which might be why some of the advanced formats were able to compress the data so much.

Integration With Jupyter

pandas has good integration into Jupyter. It can render dataframes as formatted and styled HTML tables, either by typing the dataframe variable on the last line of a cell or by using the display(my_dataframe) syntax. When dealing with large amounts of data inside a dataframe, it will truncate internal cells (with ...) to limit the table height and width (similar to when you print a large numpy array). Typically you should always leverage Jupyter’s dataframe rendering ability, rather than using print(my_dataframe) (which just prints the dataframe as a string).

Merging Tables (VLOOKUP Equivalent)

Pandas provides the ability to merge tables together in a similar fashion to the VLOOKUP function in Excel, or similar to a JOIN in SQL.

The syntax for merge() is:

pd.merge(left, right, left_on, right_on, how)The different types of joins (the how parameter), which follow the same naming convention as SQL:

inner: Rows which have matching values in both tables.left: All rows in the left table plus those matching from the right.right: All rows in the right table plus those matching from the left.outer: All rows from both tables.